Aligning Global Threats and Opportunities via AI Governance: A Commentary Series

This is the first post in a new EGG commentary series exploring how AI’s development is affecting economic, social and political decision-making around the world. Laura Mahrenbach explores AI as both opportunity and threat and argues that effective governance is the linchpin for closing the gap between the two narratives.

Artificial intelligence (AI) is here to stay. Historically, the complexity and high costs of AI technologies meant that AI was depicted as something from the realm of science fiction, or at least as something separate from everyday life. Yet AI is increasingly central to daily decisions and activities, whether we are aware of it or not.

For example, Google Maps uses AI to improve road safety along its proposed routes and to generate accurate maps for pedestrians and cyclists. Business applications of AI are similarly numerous, including customer service chatbots, e-mail spam filters, and tools that tailor suggestions to user preferences. Meanwhile, government buildings around the world use AI-based facial recognition for security purposes, and doctors use machine learning to diagnose diseases and personalize treatment.

In this context, two narratives tend to shape our understanding of how AI may affect social relations. One narrative focuses on the opportunities offered by AI and related digital technologies. As the examples above suggest, private actors are developing commercial uses for AI technology that improve patient care, enhance global communication and even keep your floors clean. Governments see opportunities as well. Meetings like the International Telecommunication Union’s annual AI for Good Summit generate ideas about how AI can be used to achieve socially beneficial goals and facilitate the public-private connections necessary to put these ideas into practice. Governments around the world are also forging ahead on their own, with the Global AI Index illustrating both the worldwide commitment to funding AI-enabled innovation and the disparities among nations in their capacity to do so.

Another narrative exists as well: AI as a threat. The threat narrative tends to be intertwined with a geopolitical-strategic approach to technological development that emphasizes inter-state competition. While the dual-use capacity of AI gives private actors ample incentives to push AI innovation, competition to secure a limited supply of top AI research talent and to shape the rules governing acceptable AI use ensures that governments are increasingly involved in setting the tone for, and funding, AI innovation. Part of the threat posed by AI stems from its military uses. These have been (violently) on display following Russia’s invasion of Ukraine, raising concerns that the use of autonomous weapons leaves us unable to protect human lives without resorting to AI technology ourselves. Yet threats are evident beyond the battlefield as well. The EU’s proposed AI Act, for instance, discusses the threats AI poses to employment, access to public services, privacy, human rights and judicial procedures across Europe, among others.

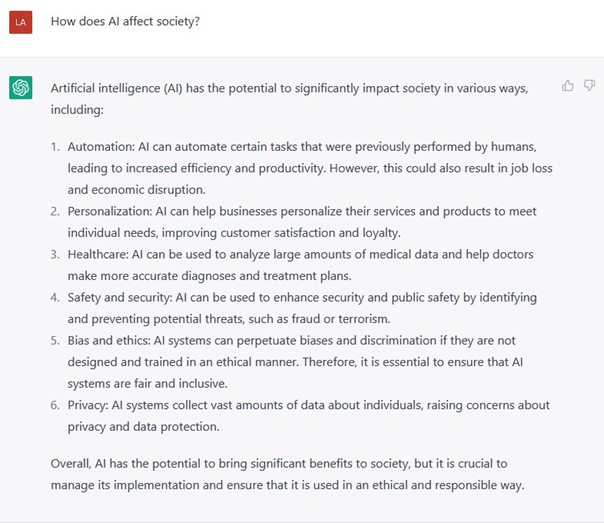

Effective governance is the linchpin for addressing these two narratives and aligning solutions to them. As ChatGPT put it when I asked, “AI has the potential to bring significant benefits to society, but it is crucial to manage its implementation and ensure that it is used in an ethical and responsible way” (see Figure 1). This may not be easy. One issue involves convincing governments to agree to rules that could put them at a geopolitical, economic or military disadvantage. Another involves the domestic consequences of regulation for private and societal actors, which can hinder political agreement even though the public has largely remained silent on these topics. A related issue involves identifying which definition(s) of AI are relevant for achieving intended regulatory goals. And then there is the question of where to regulate AI.

Figure 1. ChatGPT on how AI affects society (question posed on February 27, 2023)

Existing attempts to govern AI technologies reflect these difficulties. The first summit on Responsible AI in the Military Domain, held in the Netherlands in February 2023, was criticized as a missed opportunity for US (and Chinese) leadership: states agreed to develop AI “responsibly” but did not define what responsible use entails. While European businesses welcome the regulatory clarifications accompanying the EU’s AI Act, which is expected to come into force by the end of 2023, survey data suggest they also fear that its implementation will harm the competitiveness of Europe’s AI industry. Previous attempts to regulate the use of autonomous weapons have repeatedly stalled over disagreements about what “autonomous” means in practice.

Clearly, exploring both the social implications of AI and the governance opportunities (domestic and international) it affords is timely and necessary. Over the next few weeks, the contributors to this commentary series will illustrate how the two interact in diverse issue areas (e.g., economy, environment, culture) and governance settings (e.g., national, regional, global). My interview partners, in turn, provide insights into how these issues shape the development and implementation of goals involving AI technologies in the private and non-profit sectors.

To maximize the breadth of our discussions, I adopt a broad definition of AI in this series, namely, “technology that automatically detects patterns in data, and makes predictions on the basis of them.” I also acknowledge that technological progress may ultimately demand a re-evaluation of this definition. For instance, governance discussions of autonomous transportation systems call for “humans in the loop,” essentially conceptualizing humans and technologies as partners rather than as inventor and invention or as governor and governed.

The contributors to this series and I look forward to such developments and to stimulating discussions of this technology and its governance, both here and in the future. We also welcome your feedback and engagement, so please feel free to contact us via social media or email.

Laura Mahrenbach is an adjunct professor at the School of Social Sciences and Technology at the Technical University of Munich. Her research explores how global power shifts and technology interact, with a special focus on the implications of this interaction for the countries of the Global South as well as related governance dilemmas at the national and global levels. More information is available at www.mahrenbach.com. This work was supported by Deutsche Forschungsgemeinschaft (Grant No. 3698966954).

Photo by Tara Winstead